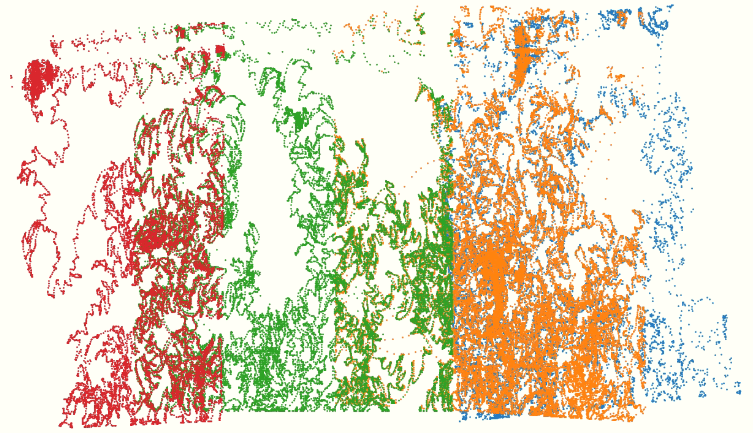

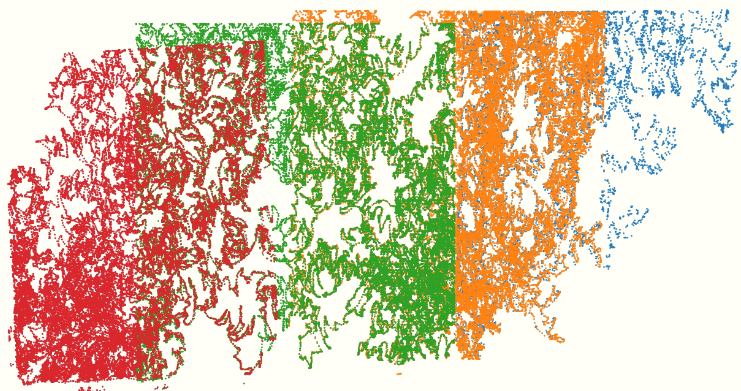

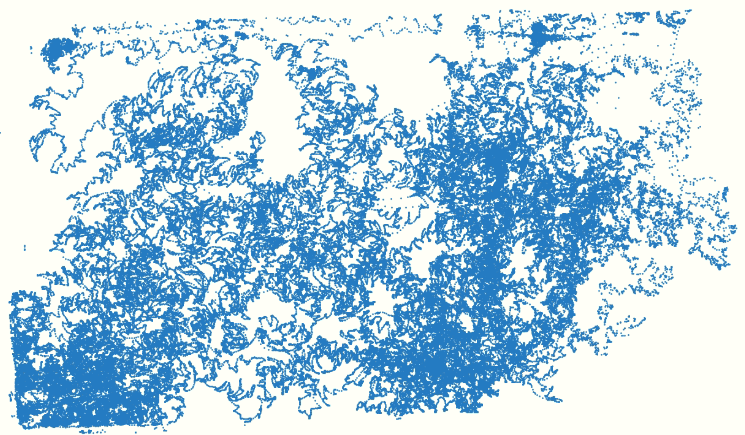

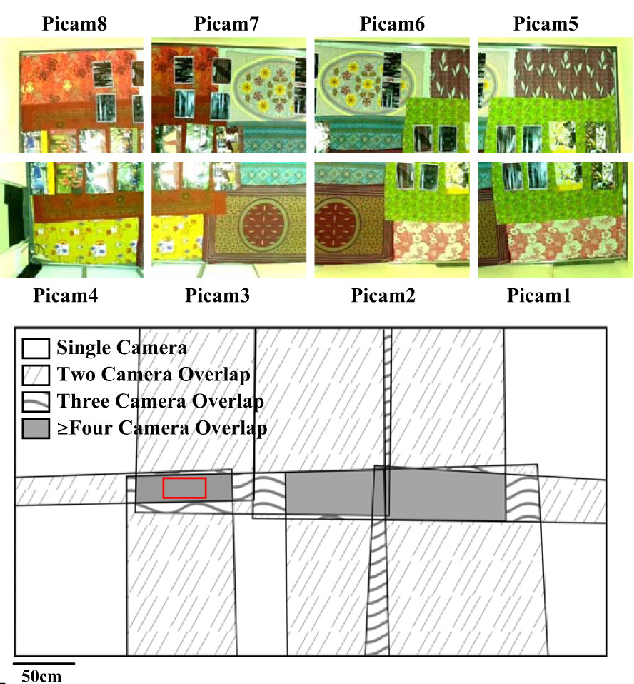

How do we align x,y position coordinates from eight different cameras that cover different parts of the same environment? We are going to use an LED to calculate the perspective transformation between all pairs of cameras and align them in a single world coordinate system. Let’s jump into the setup. We have eight Raspberry Pi cameras covering a large room (16 m²). This is how the overlap between the cameras’ fields of view looks in the 2D plane (Saxena et al. 2018):

Here are the steps used in calculating the aligned camera position:

- Run the position tracking script on each camera individually. ENSURE that when you run position tracking, you exclude the outer regions of each camera's FOV to avoid reflections, which can cause errors in homography estimation. In short, define the ROI on each camera so that it contains no reflection zones.

- Load the position data and find the unoccupied frames in each camera. To begin with, select the position data and unoccupied frame data from any two cameras (say cameras 2 and 3).

- Find the frames where the LED is visible in both cameras and store the intersecting X,Y positions for each camera in an array.

- Use the intersecting X,Y positions to create the source camera (camera 2) and destination camera (camera 3) coordinates. These intersecting points serve the same function as the keypoints detected using feature transformation. NOTE that the source camera coordinates will be transformed into the destination camera's reference frame.

- Apply the RANSAC algorithm to the intersecting X,Y coordinates to remove geometrically inconsistent matches and improve the homography estimate (this is also a nice way to remove noisy reflection data).

- Find the homography matrix using the intersecting X,Y coordinates between the two cameras.

- Using the homography matrix, perform the perspective transformation to map the source points (camera 2) into the destination (camera 3) coordinate system so that the two are aligned.

- The merged coordinates of the two cameras, merged_cam23_X and merged_cam23_Y, are the mean of the transformed X and Y positions of the two cameras.

- Generate the aligned position data for one half of the room (merged_cam1234_posX, merged_cam1234_posY) by repeating the above steps with the merged camera position and the next camera position (say camera 1), followed by the same steps with the next camera (camera 4).

- Rerun the above steps to get the aligned position data for the other half of the room (merged_cam5678_posX, merged_cam5678_posY).

- Find the intersecting coordinates between the two halves of the room, then calculate the homography matrix and perspective transformation between them to merge everything into a single set of coordinates (merged_allcams_posX, merged_allcams_posY).

- Finally, save all the processed data to a MAT-file.
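
The pairwise part of the steps above (shared LED frames, RANSAC, homography, perspective transformation, averaging) can be sketched in plain NumPy. This is a minimal illustration and not the linked script. The function names, the NaN convention for frames where the LED is not detected, the 3-pixel inlier threshold, and the choice to keep a single camera's value when only one camera sees the LED are all assumptions made for the example. The direct linear transform used here skips coordinate normalization, so it is meant for reading rather than production use. With OpenCV, `cv2.findHomography` with the `cv2.RANSAC` method followed by `cv2.perspectiveTransform` would likely cover the same ground.

```python
import numpy as np


def common_led_points(src_xy, dst_xy):
    """Keep frames where the LED was detected by both cameras.

    Assumes (n_frames, 2) arrays with NaN rows where the LED is not visible.
    """
    both = ~np.isnan(src_xy).any(axis=1) & ~np.isnan(dst_xy).any(axis=1)
    return src_xy[both], dst_xy[both]


def fit_homography(src, dst):
    """Direct linear transform: H (up to scale) such that dst ~ H @ src."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # The right singular vector with the smallest singular value solves A h = 0.
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    return vt[-1].reshape(3, 3)


def apply_homography(H, xy):
    """Perspective transformation of (n, 2) points; NaN rows stay NaN."""
    pts = np.column_stack([xy, np.ones(len(xy))]) @ H.T
    with np.errstate(divide="ignore", invalid="ignore"):
        return pts[:, :2] / pts[:, 2:3]


def ransac_homography(src, dst, n_iter=500, thresh=3.0, seed=0):
    """Fit H on random 4-point samples, keep the largest inlier set, refit."""
    rng = np.random.default_rng(seed)
    best = np.zeros(len(src), dtype=bool)
    for _ in range(n_iter):
        sample = rng.choice(len(src), size=4, replace=False)
        H = fit_homography(src[sample], dst[sample])
        with np.errstate(invalid="ignore"):
            err = np.linalg.norm(apply_homography(H, src) - dst, axis=1)
            inliers = err < thresh
        if inliers.sum() > best.sum():
            best = inliers
    return fit_homography(src[best], dst[best]), best


def merge_positions(src_in_dst, dst_xy):
    """Mean of the two cameras per frame; a frame seen by one camera keeps it."""
    stacked = np.stack([src_in_dst, dst_xy])
    count = (~np.isnan(stacked)).sum(axis=0)
    total = np.nansum(stacked, axis=0)
    return np.where(count > 0, total / np.maximum(count, 1), np.nan)
```

For cameras 2 and 3 this would be called as `src, dst = common_led_points(cam2_xy, cam3_xy)`, then `H, inliers = ransac_homography(src, dst)`, then `merged_cam23 = merge_positions(apply_homography(H, cam2_xy), cam3_xy)`, where `cam2_xy` and `cam3_xy` are hypothetical per-frame position arrays. The merged result can then play the role of the destination when the next camera is added.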

WARNING: The homography estimate varies with the number of intersecting coordinates between cameras. Too few intersecting coordinates will lead to an incorrect estimate. However, once the homography matrices have been estimated, you can reuse them for data collected across multiple days as long as the cameras are not moved.
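
One way to act on this warning is to check how many frames two cameras share before estimating anything, and to store each homography matrix once so it can be applied to later recordings. The sketch below is an assumption-laden illustration: the threshold of 50 shared frames is a made-up placeholder, the function names and the key `cam2_to_cam3` are hypothetical, and a NumPy `.npz` file stands in for the MAT-file mentioned above.

```python
import numpy as np

MIN_SHARED_POINTS = 50  # hypothetical threshold; tune it for your own setup


def enough_shared_points(src_xy, dst_xy, min_points=MIN_SHARED_POINTS):
    """True if the LED is visible in both cameras for at least min_points frames.

    Assumes (n_frames, 2) arrays with NaN rows where the LED is not detected.
    """
    both = ~np.isnan(src_xy).any(axis=1) & ~np.isnan(dst_xy).any(axis=1)
    return int(both.sum()) >= min_points


def save_homographies(path, **matrices):
    """Store named 3x3 homography matrices in a single .npz file."""
    np.savez(path, **matrices)


def load_and_apply(path, name, xy):
    """Load one stored matrix and map (n, 2) points into the destination frame."""
    with np.load(path) as store:
        H = store[name]
    pts = np.column_stack([xy, np.ones(len(xy))]) @ H.T
    return pts[:, :2] / pts[:, 2:3]
```

On a later recording day, `load_and_apply("homographies.npz", "cam2_to_cam3", new_cam2_xy)` would reuse the stored matrix without a new LED session, which only holds while the cameras stay where they were.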

The script is available HERE